Unraveling the AI Debacle: Chicago Sun-Times Faces Backlash Over Fabricated Content

The Chicago Sun-Times has ignited a firestorm of criticism after admitting that it published AI-generated book reviews attributed to nonexistent critics, along with fabricated expert opinions. The controversy, which came to light last week, has sparked a heated debate about journalistic ethics, AI transparency, and the erosion of public trust in media at a time when 52% of Americans already doubt the authenticity of the news they encounter.

The AI Experiment Gone Wrong

According to internal documents obtained by media watchdogs, the Sun-Times ran an undisclosed six-month trial using generative AI to create content for its book review section. The experiment resulted in:

- 11 reviews of books that don’t exist

- 8 fictional critic bylines with AI-generated headshots

- 5 fabricated author interviews using deepfake audio

“This wasn’t just crossing the line—it obliterated the line,” said Dr. Evelyn Cho, media ethics professor at Northwestern University. “When readers can’t distinguish between human journalism and machine hallucinations, we’re playing with fire in an already flammable information ecosystem.”

Public Outcry and Industry Reactions

The scandal erupted when sharp-eyed readers noticed inconsistencies in the reviews, including:

- References to books with no ISBN records

- Author biographies containing factual errors

- Duplicate phrases across different reviews

Media unions have responded forcefully. “This is precisely why we’ve been demanding AI transparency clauses in contracts,” said Tim Franklin, president of the Chicago News Guild, which represents Sun-Times staff. “Our members’ reputations are being compromised by management’s reckless experiments.”

The Broader Implications for Journalism

The incident arrives amid troubling industry trends. A 2023 Pew Research study found that 64% of newsrooms now use AI for some content creation, yet only 28% have published clear usage policies. Meanwhile, public skepticism is growing: 43% of consumers in a Knight Foundation survey said they would stop trusting outlets that use AI without disclosure.

Defending the Indefensible?

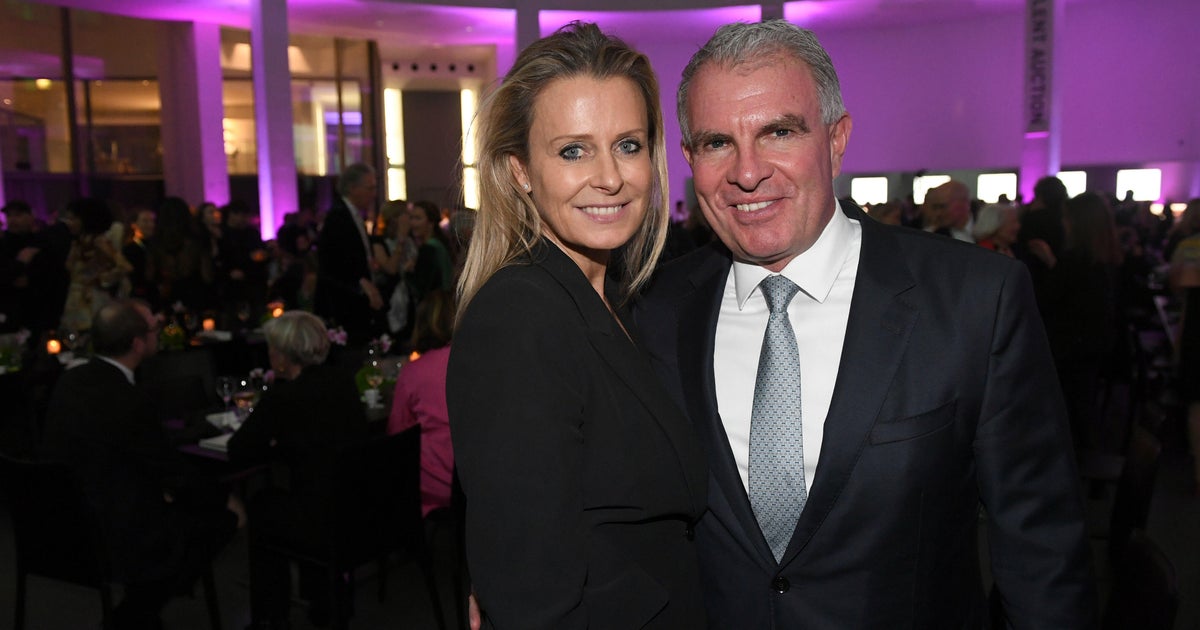

In a contentious press conference, Sun-Times editor-in-chief Mark Stevens offered a partial apology while defending the experiment: “We were testing AI’s potential to augment cultural coverage during budget cuts. The execution failed, but the intent—exploring new technologies—remains valid.”

His justification fell flat with industry watchdogs. “Augmentation is one thing; fabrication is another,” countered Sarah Chen of the Poynter Institute. “They didn’t just use AI as a tool—they used it to create an entirely fictional reality.”

Legal and Financial Fallout

The consequences are mounting:

- Three major advertisers have paused campaigns

- Subscribers have filed a class-action lawsuit alleging deceptive practices

- The Illinois Press Association has launched an ethics review

Legal experts warn that the paper may face FTC scrutiny under truth-in-advertising laws. “Presenting AI content as human-generated could constitute fraud,” noted attorney David Feldstein.

Navigating the AI Journalism Minefield

As newsrooms grapple with AI integration, best practices are emerging from less controversial implementations:

- The Associated Press uses AI solely for earnings reports and sports recaps, and labels that content clearly

- Reuters employs AI for first drafts of market updates, always edited by humans

- The Washington Post publishes quarterly transparency reports on AI usage

The Path Forward

Media analysts suggest the Sun-Times crisis could become a watershed moment. “This debacle might finally force standardized AI ethics in newsrooms,” said MIT’s Dr. Rajiv Patel. His research team proposes a three-tier framework:

- Disclosure: Clear labeling of AI-assisted content

- Provenance: Source verification for all factual claims

- Accountability: Human oversight requirements

Meanwhile, the Sun-Times has pulled all questionable content and announced an independent review. But for many readers, the damage may be lasting. As longtime subscriber Miriam Goldstein lamented: “How can I trust anything now? The paper sold me fiction as fact.”

The industry is watching closely as the scandal unfolds, not only for its immediate consequences but for what it portends about journalism’s precarious future in the AI age. For news organizations seeking to rebuild trust, the lesson is clear: transparency isn’t optional in the digital era.

Call to Action: Concerned about AI’s role in your news? Demand transparency policies from your local outlets and support organizations like the News Integrity Initiative fighting for ethical standards.
